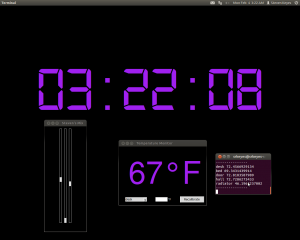

If you walk into my room, you might see an interesting interface on my desktop:

I’m referencing the clock and the mixer, not Unity :p

These are two tools I made freshman year: a unique clock and an audio mixer. They run on my desktop, an old HP computer that I got off reuse. I made the clock first, so I’ll describe it first and save the mixer for a later post.

Background

Laypeople count “1, 2, 3, …”; programmers count “0, 1, 2, 3, …”. However, timekeepers must be really terrible at counting because, for each am or pm period, they count “12, 1, 2, 3, …”! A couple of standards for time exist around MIT, whose students often require timekeeping that is unambiguous even during the night hours.

Take for example Random Standard Time (RST), created to accommodate the waking hours of residents of Random Hall. In RST, each day starts at 6:00 and ends at 30:00. 6:00 RST aligns with 6:00am of that day while 30:00 is actually 6:00am the next morning. This way, residents can avoid the inconvenience of day changes at midnight.
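To make the arithmetic concrete, here is a minimal sketch of converting a standard local time into RST as described above. The function name `to_rst` and the constant `RST_DAY_START_HOUR` are made up for illustration, and I'm assuming that exactly 6:00am counts as the start of the new RST day rather than 30:00 of the old one.

```python
from datetime import datetime, timedelta

RST_DAY_START_HOUR = 6  # assumption: an RST day runs from 6:00 up to 30:00


def to_rst(moment: datetime) -> tuple:
    """Return (rst_date, "H:MM") for a standard local datetime."""
    # Shifting back by 6 hours puts every moment on the calendar date
    # that RST considers it to belong to.
    shifted = moment - timedelta(hours=RST_DAY_START_HOUR)
    rst_hour = shifted.hour + RST_DAY_START_HOUR  # always in 6..29
    return shifted.date(), f"{rst_hour}:{moment.minute:02d}"


if __name__ == "__main__":
    # 1:07am on 31 December is still 30 December in RST.
    print(to_rst(datetime(2023, 12, 31, 1, 7)))
```

With this sketch, 1:07am on 31 December comes out as 25:07 on 30 December, and 6:00am on 31 December comes out as 6:00 on 31 December.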

Another example is the practice of adding a delay to events around midnight to avoid the confusion of day changes. Suppose, for example, I have a pset due 9am Wednesday. If I want to tool with my friends 7 hours before the pset is due (that is, at 2am), it is ambiguous which day to tell my friends to join me. To employ the delay strategy, I would say to meet at 11:59pm Tuesday night, which falls unambiguously within a certain 24-hour period. Then, I would add that we should be 2 hours late, thus meeting at the desired time. One alternative phrasing, meeting at “2AM Tuesday night”, sounds a lot like meeting at “2AM Tuesday”, which would allow far too little punting!

Because hackers are notoriously late, a 15-minute delay is sometimes called “Hacker Standard Time”, so the phrase “HST” can be used with the delay strategy described above to concisely remove ambiguity for events around midnight.

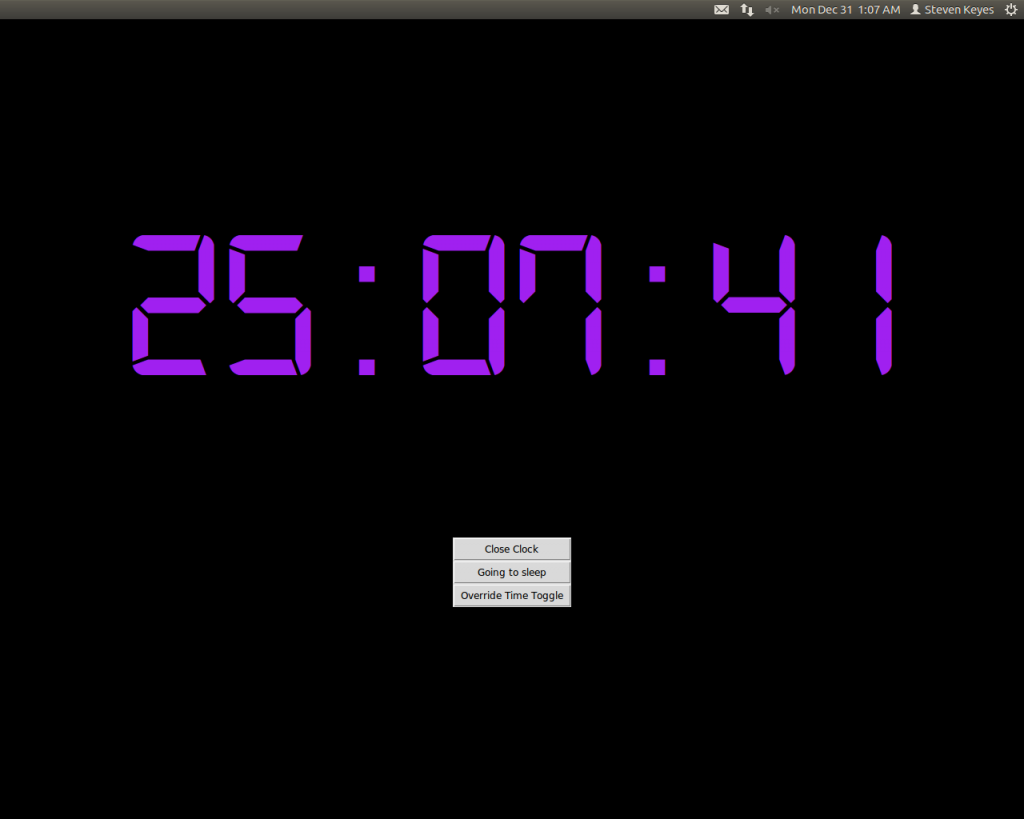

Beyond these standards, there are lots of cool ways to count time. One more practice listed in the Wikipedia article is to continue counting past 23:59 to express nighttime am hours as 24:00, 25:00, 26:00, etc., and this is the basis for my clock.

Besides timekeeping, RST also implies a system of datekeeping in which every day is still 24 hours but each day is shifted. However, the following rule is my favorite system for datekeeping: it is considered a new day if at least two of the following happen; otherwise, it’s still night of the previous day.

- You eat breakfast.

- The sun rises.

- You wake up.

This system is great because, even if you pull an all-nighter, you are still guaranteed a new day as long as you eat something that you consider breakfast, and there is a new sun in the sky. Conversely, maybe you slept all afternoon, woke up at 11:00pm, and you’d like it to be tomorrow already so you can start feeling productive. Simply chug some cereal and you’re golden.
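For the programmers in the audience, the two-out-of-three rule is just a majority vote. A tiny sketch, with a made-up function name and argument names:

```python
def is_new_day(ate_breakfast: bool, sun_rose: bool, woke_up: bool) -> bool:
    """It is a new day if at least two of the three events have happened."""
    # Booleans count as 0 or 1 when summed.
    return sum([ate_breakfast, sun_rose, woke_up]) >= 2


if __name__ == "__main__":
    # All-nighter: never woke up, but ate breakfast and saw the sunrise.
    print(is_new_day(ate_breakfast=True, sun_rose=True, woke_up=False))
    # Woke up at 11:00pm and chugged cereal: no sunrise needed.
    print(is_new_day(ate_breakfast=True, sun_rose=False, woke_up=True))
```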

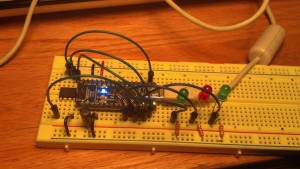

Implementation

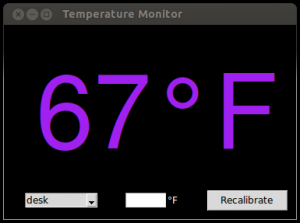

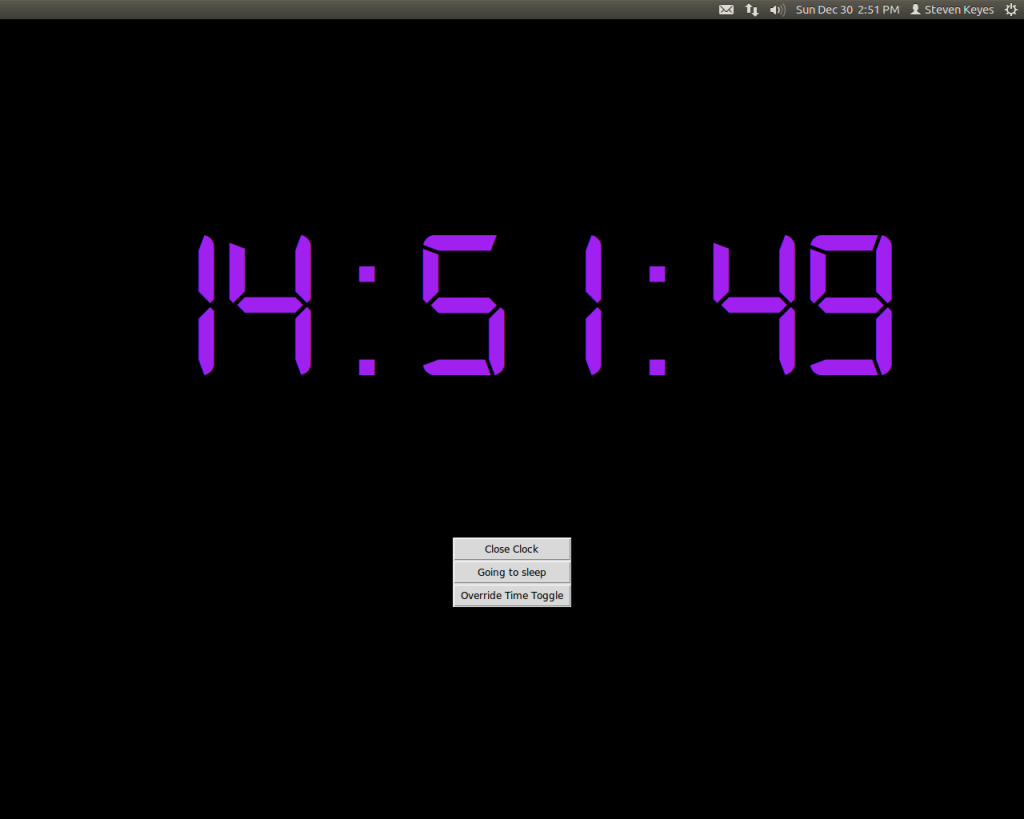

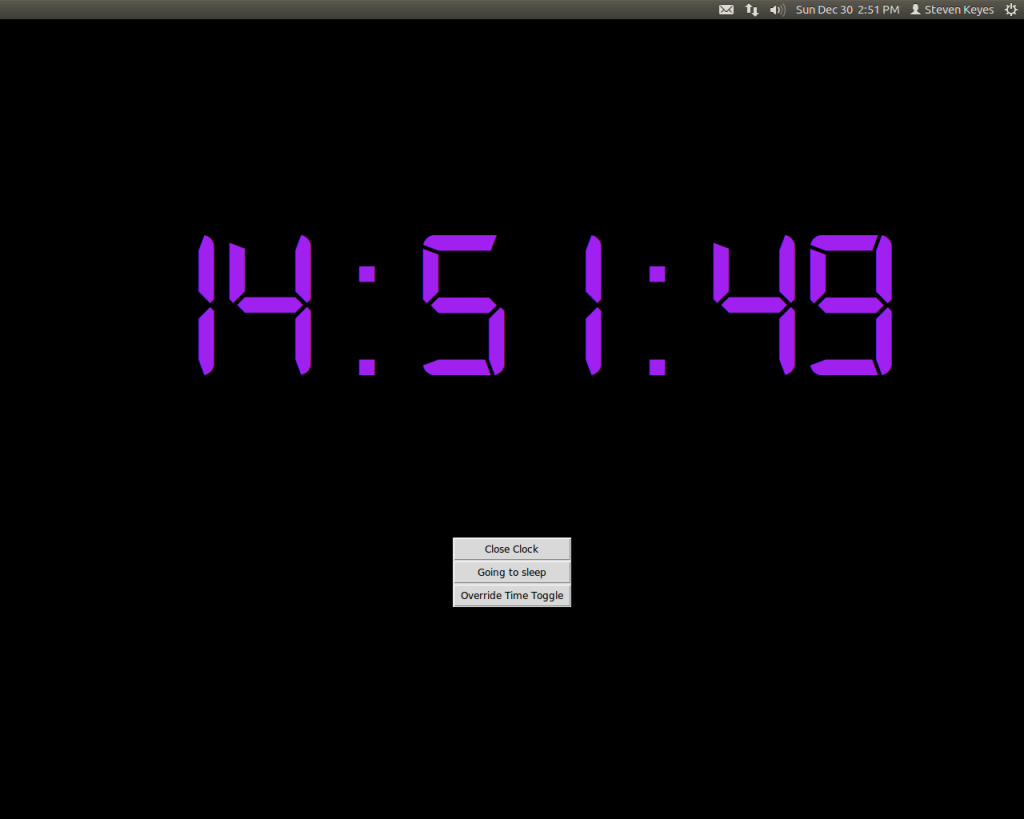

Regardless of how you keep dates or time, I wanted to have some fun making a unique clock for my room, and I wanted to practice some of the Python I had been learning in classes like IAP’s 6.S189, so I made this clock. I used the tkinter and time modules for the first time. I also tried Python 3 instead of Python 2, which we used in class. The font is Digital-7, which is free for personal use. I made the font large enough that I could read it from bed without glasses, which is more than I can say for my cell phone or alarm clock.
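This is not the clock's actual source, but a bare-bones tkinter clock in Python 3 can look something like the sketch below. The names `clock_text` and `run_clock`, the window title, and the font size are all placeholders, and I'm assuming the font is installed under the family name "Digital-7" (Tk quietly falls back to a default font if it can't find it).

```python
import time


def clock_text(t=None):
    """Format a time.struct_time as H:MM on a 24-hour scale."""
    if t is None:
        t = time.localtime()
    return f"{t.tm_hour}:{t.tm_min:02d}"


def run_clock():
    # Imported here so clock_text can be used on a machine without a display.
    import tkinter as tk

    root = tk.Tk()
    root.title("Clock")  # placeholder title
    # Assumed font family name and size; adjust to whatever is readable from bed.
    label = tk.Label(root, font=("Digital-7", 120), text=clock_text())
    label.pack()

    def tick():
        # Refresh the label, then schedule the next refresh in one second.
        label.config(text=clock_text())
        root.after(1000, tick)

    tick()
    root.mainloop()
```

Calling `run_clock()` opens the window. The `after` call is what keeps the display updating without blocking the interface.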

Example shot at 2:51pm

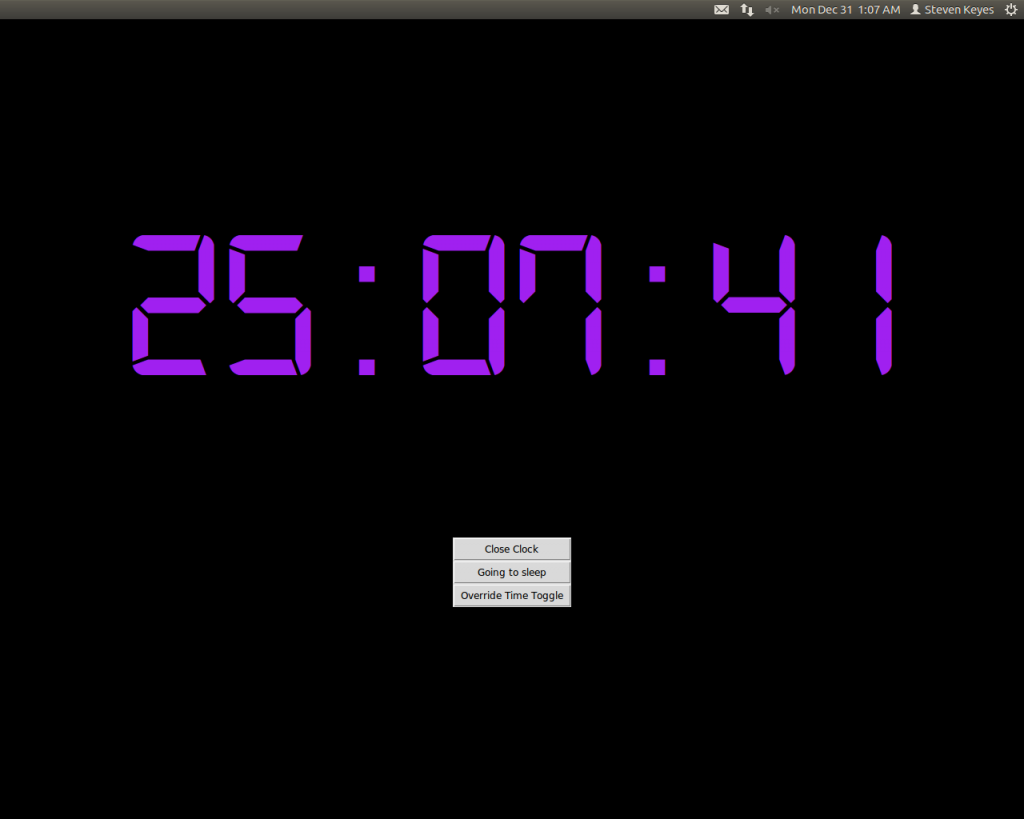

For my clock, the time starts synced with normal time when I wake up. Rather than am or pm, the clock runs from 0:00 to 23:59. Then, at midnight, it keeps counting up through 24:00, 25:00, etc., until I press a button to tell it that I’m going to sleep. When I wake up, I press a button to tell it, and the clock re-syncs with standard time.
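The display side of this boils down to adding 24 to the hour while the night is still "yesterday". A minimal sketch, where `extended_time` and its `extended` flag are names invented for this example:

```python
from datetime import datetime


def extended_time(now: datetime, extended: bool) -> str:
    """Show the time as H:MM, counting past 23:59 when `extended` is set."""
    # While extended, 1:07am is shown as 25:07 of the previous day.
    hour = now.hour + 24 if extended else now.hour
    return f"{hour}:{now.minute:02d}"


if __name__ == "__main__":
    print(extended_time(datetime(2023, 12, 30, 14, 51), extended=False))
    print(extended_time(datetime(2023, 12, 31, 1, 7), extended=True))
```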

Example shot late in the night of 30 December; you can see in the top left that it’s actually 1:07am on 31 December

To do this, the program toggles a Boolean at midnight to indicate that the time is extended from the previous day. The “Going to sleep” button seen above disables this toggle, so the clock rolls over to 0:00 at midnight. A “Waking up” button, which appears in place of the “Going to sleep” button, re-enables the toggle for the next night. The “Override” button seen above manually toggles the variable, and the “Close Clock” button closes the clock.
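The button logic can be sketched as a small state object. This is one reading of the description above rather than the real code: the class and method names are hypothetical, I'm assuming that midnight sets the Boolean to true instead of flipping it, and I'm assuming that "Waking up" also clears it so the clock re-syncs with standard time.

```python
class SleepAwareClock:
    """Tracks whether the displayed time is extended from the previous day."""

    def __init__(self):
        self.extended = False       # the Boolean set at midnight
        self.toggle_enabled = True  # False between "Going to sleep" and "Waking up"

    def on_midnight(self):
        # Keep counting past 23:59 only if I haven't gone to sleep yet.
        if self.toggle_enabled:
            self.extended = True

    def going_to_sleep(self):
        # With the toggle disabled, the clock rolls over to 0:00 at midnight.
        self.toggle_enabled = False

    def waking_up(self):
        # Re-enable the toggle for the next night and re-sync (assumption).
        self.toggle_enabled = True
        self.extended = False

    def override(self):
        # Manual fix-up in case the state is wrong.
        self.extended = not self.extended


if __name__ == "__main__":
    clock = SleepAwareClock()
    clock.on_midnight()    # still awake at midnight
    print(clock.extended)  # extended hours are now shown
    clock.waking_up()
    print(clock.extended)  # back to standard time
```

In a tkinter version, each method would be wired to its button through the button's `command` option, and `on_midnight` would be called whenever the clock notices the calendar date change.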

The “going to sleep” changes to a “waking up” button after being pressed

For my own curiosity, I’d also like to implement a program that keeps a log of when I sleep. However, the interface for this would probably be more convenient on a phone or some other device that I carry with me regardless of where I sleep, so a program on my desktop is not ideal. I plan to link this to my clock so that I can reset it remotely.

P.S. Even if you’re away from your dorm room for winter break, you can still apparently ssh into your desktop and take screenshots: `xwd -out screenshot.xwd -root -display :0.0`